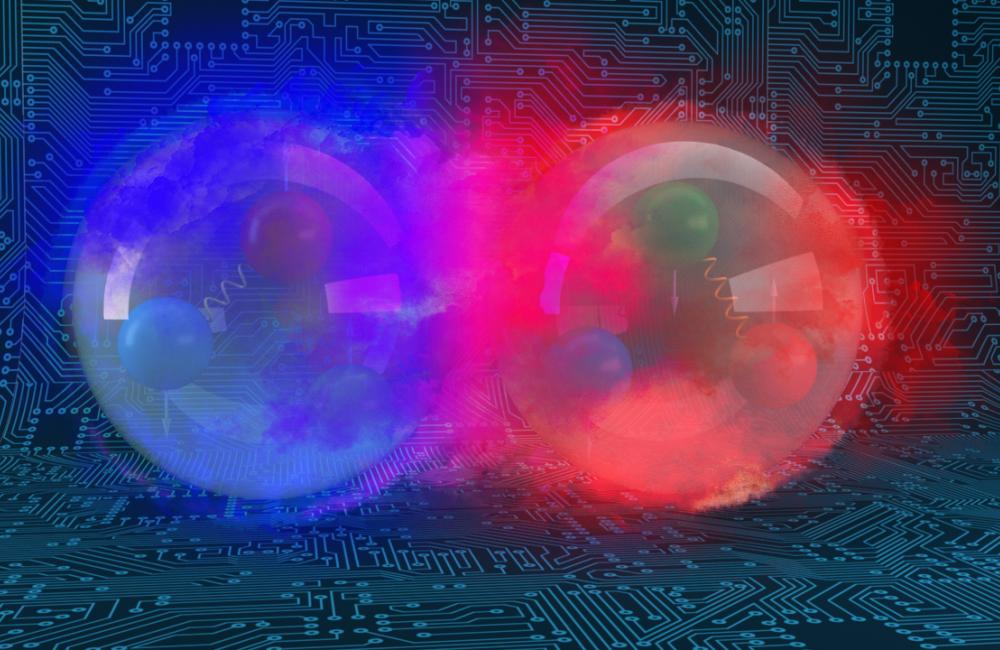

This image of a deuteron shows the bound state of a proton, in red, and a neutron, in blue. Credit: Andy Sproles/ORNL, U.S. Dept. of Energy

Since the 1930s, scientists have been using particle accelerators to gain insights into the structure of matter and the laws of physics that govern our world. These accelerators are some of the most powerful experimental tools available, propelling particles to nearly the speed of light and then colliding them to allow physicists to study the resulting interactions and particles that form.

Many of the largest particle accelerators aim to provide an understanding of hadrons – subatomic particles such as protons or neutrons that are made up of two or more particles called quarks. Quarks are among the smallest particles in the universe, and they carry only fractional electric charges. Scientists have a good idea of how quarks make up hadrons, but the properties of individual quarks have been difficult to tease out because they can’t be observed outside of their respective hadrons.

Using the Summit supercomputer housed at the Department of Energy’s Oak Ridge National Laboratory, a team of nuclear physicists led by Kostas Orginos at the Thomas Jefferson National Accelerator Facility and William & Mary has developed a promising method for measuring quark interactions in hadrons and has applied this method to simulations using quarks with close-to-physical masses. To complete the simulations, the team used a powerful computational technique called lattice quantum chromodynamics, or LQCD, coupled with the computing power of Summit, the nation’s fastest supercomputer. The results were published in Physical Review Letters.

“Typically, scientists have only known a fraction of the energy and momentum of quarks when they’re in a proton,” said Joe Karpie, postdoctoral research scientist at Columbia University and leading author on the paper. “That doesn’t tell them the probability that quark could turn into a different kind of quark or particle. Whereas past calculations relied on artificially large masses to help speed up the calculations, we now have been able to simulate these at very close to physical mass, and we can apply this theoretical knowledge to experimental data to make better predictions about subatomic matter.”

The team’s calculations will complement experiments performed on DOE’s upcoming Electron-Ion Collider, or EIC, a particle collider to be built at Brookhaven National Laboratory, or BNL, that will provide detailed spatial and momentum 3D maps of how subatomic particles are distributed inside the proton.

Understanding the properties of individual quarks could help scientists predict what will happen when quarks interact with the Higgs boson, an elementary particle associated with the Higgs field, which gives mass to the particles that interact with it. The method could also be used to help scientists understand phenomena that are governed by the weak force, which is responsible for radioactive decay.

Simulations at the smallest scales

To paint an accurate picture of how quarks operate, scientists typically must average the properties of quarks inside their respective protons. Using results from collider experiments like the ones at the Relativistic Heavy Ion Collider at BNL, the Large Hadron Collider at CERN or DOE’s upcoming EIC, they can extract a fraction of a quark’s energy and momentum.

But predicting how much quarks interact with particles such as the Higgs boson and calculating the full distribution of quark energies and momenta have remained long-standing challenges in particle physics.

Bálint Joó recently joined the staff at the lab’s Oak Ridge Leadership Computing Facility, a DOE Office of Science user facility. To begin tackling this problem, Joó turned to the Chroma software suite for lattice QCD and NVIDIA’s QUDA library. Lattice QCD gives scientists the ability to study quarks and gluons – the elementary glue-like particles that hold quarks together – on a computer by representing space-time as a grid or a lattice on which the quark and gluon fields are formulated. Using Chroma and QUDA (for QCD on CUDA), Joó generated snapshots of the strong-force field in a cube of space-time, weighting the snapshots to describe what the quarks were doing in the vacuum. Other team members then took these snapshots and simulated what would happen as quarks moved through the strong-force field.
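To give a feel for what "weighting the snapshots" means, here is a minimal Markov chain Monte Carlo sketch. It is not taken from Chroma or QUDA, and it assumes a drastically simplified stand-in: a two-dimensional compact U(1) gauge field (one angle per link) instead of the four-dimensional SU(3) gluon field of real lattice QCD, a simple Metropolis update instead of the algorithms production codes use, and a hypothetical coupling beta = 2. Rather than attaching explicit weights, the chain visits each field configuration with probability proportional to exp(-S), where S is the Wilson plaquette action, and every few sweeps a copy is saved as a "snapshot."

```python
import math
import random

# Toy stand-in: 2D compact U(1) lattice gauge theory (one angle per link),
# NOT the 4D SU(3) gluon field used in real lattice QCD.


def plaquette_angle(theta, x, y, L):
    """Oriented sum of link angles around the unit square at corner (x, y).

    theta[mu][x][y] is the angle on the link leaving site (x, y) in direction
    mu (0 = x, 1 = y); boundaries are periodic.
    """
    xp, yp = (x + 1) % L, (y + 1) % L
    return theta[0][x][y] + theta[1][xp][y] - theta[0][x][yp] - theta[1][x][y]


def local_action(theta, mu, x, y, L, beta):
    """Wilson action beta * (1 - cos) of the two plaquettes containing one link."""
    if mu == 0:
        corners = [(x, y), (x, (y - 1) % L)]
    else:
        corners = [(x, y), ((x - 1) % L, y)]
    return sum(beta * (1.0 - math.cos(plaquette_angle(theta, cx, cy, L)))
               for cx, cy in corners)


def metropolis_sweep(theta, L, beta, step, rng):
    """Propose a random change to every link once; accept with min(1, exp(-dS))."""
    for mu in (0, 1):
        for x in range(L):
            for y in range(L):
                old = theta[mu][x][y]
                s_old = local_action(theta, mu, x, y, L, beta)
                theta[mu][x][y] = old + rng.uniform(-step, step)
                s_new = local_action(theta, mu, x, y, L, beta)
                if rng.random() >= math.exp(min(0.0, s_old - s_new)):
                    theta[mu][x][y] = old  # reject: restore the old link


def average_plaquette(theta, L):
    """Mean of cos(plaquette angle) over the lattice, a simple observable."""
    return sum(math.cos(plaquette_angle(theta, x, y, L))
               for x in range(L) for y in range(L)) / (L * L)


def generate_snapshots(L=8, beta=2.0, n_therm=200, n_snap=50, n_skip=5,
                       step=1.0, seed=1):
    """Thermalize from a cold start, then save every n_skip-th configuration."""
    rng = random.Random(seed)
    theta = [[[0.0] * L for _ in range(L)] for _ in range(2)]
    for _ in range(n_therm):
        metropolis_sweep(theta, L, beta, step, rng)
    snapshots = []
    for _ in range(n_snap):
        for _ in range(n_skip):
            metropolis_sweep(theta, L, beta, step, rng)
        snapshots.append([[row[:] for row in plane] for plane in theta])
    return snapshots
```

For this toy model the average plaquette has a known exact value, I1(beta)/I0(beta), about 0.698 at beta = 2, so averaging `average_plaquette` over the returned snapshots gives a quick sanity check that the chain is sampling the right distribution.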

“If you drop a quark into this field, it will propagate similarly to how dropping an electric charge into an electric field causes electricity to propagate through the field,” Joó said.

With a grant of computational time from DOE’s Innovative and Novel Computational Impact on Theory and Experiment program, as well as support from the Scientific Discovery through Advanced Computing program and the Exascale Computing Project, the team used Summit to combine the propagator calculations into the final particle states from which it extracted its results.

“We set what are known as the bare quark masses and the quark-gluon coupling in our simulations,” Joó said. “The actual quark masses, which arise from these bare values, need to be computed from the simulations – for example, by comparing the values of some computed particles to their real-world counterparts, which are experimentally known.”

Drawing from physical experiments, the team knew that the lightest physical particles they were simulating – called the pi mesons, or pions – should have a mass of around 140 megaelectron volts, or MeV. The pion masses in the team’s calculations ranged from 358 MeV down to 172 MeV, the lightest of which is close to the experimental value.
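A standard way lattice practitioners read off a particle mass such as the pion’s is from the exponential decay, in Euclidean time, of a two-point correlation function built from quark propagators, followed by a conversion from lattice units using the lattice spacing a and ħc ≈ 197.327 MeV·fm. The article does not describe the team’s analysis at this level, so the following is only a generic sketch on a synthetic correlator. It assumes a hypothetical spacing of 0.094 fm, one made-up excited state, and an infinite time extent (no periodic wraparound).

```python
import math

HBAR_C_MEV_FM = 197.327  # hbar * c in MeV * fm


def effective_mass(corr):
    """Lattice-unit effective mass ln(C(t) / C(t + 1)) for each time slice t."""
    return [math.log(corr[t] / corr[t + 1]) for t in range(len(corr) - 1)]


def to_mev(am, a_fm):
    """Convert a dimensionless lattice mass a*m to MeV, given spacing a in fm."""
    return am * HBAR_C_MEV_FM / a_fm


# Hypothetical inputs for illustration only: spacing a = 0.094 fm, a ground
# state with m = 172 MeV plus one excited state with a*m = 0.5 (assumed).
a_fm = 0.094
am_ground = 172.0 * a_fm / HBAR_C_MEV_FM
corr = [math.exp(-am_ground * t) + 0.8 * math.exp(-0.5 * t) for t in range(48)]

m_eff = effective_mass(corr)
# The excited state inflates m_eff at small t; by t = 30 it has died away.
print(f"m_eff(0)  = {to_mev(m_eff[0], a_fm):.1f} MeV")
print(f"m_eff(30) = {to_mev(m_eff[30], a_fm):.1f} MeV")
```

The plateau at large t recovers the mass that was put into the synthetic data; with real data the correlator is noisy, and choosing the plateau region is part of the analysis.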

The simulations required the power of Summit because of the number of vacuum snapshots the team had to generate and the number of quark propagators that needed to be calculated on them. To make an estimate of the results at the physical quark mass, calculations needed to be carried out at three different masses of quarks and extrapolated to the physical one. In total, the team used more than 1,000 snapshots over three different quark masses in cubes with lattices ranging from 32³ to 64³ points in space.
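The extrapolation step can be sketched as a straight-line fit in the squared pion mass, a common simple ansatz, evaluated at the physical value of 140 MeV. This is an illustrative assumption rather than the fit form used in the paper. The 358 and 172 MeV points come from the article; the 278 MeV middle point and all observable values are hypothetical placeholders, generated from a known line so that the result can be checked.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = a + b * x; returns (a, b)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


def extrapolate_to_physical(pion_masses_mev, values, physical_mev=140.0):
    """Fit values linearly in m_pi^2 and evaluate the fit at the physical pion mass."""
    xs = [m * m for m in pion_masses_mev]
    intercept, slope = fit_line(xs, values)
    return intercept + slope * physical_mev ** 2


# 358 and 172 MeV come from the article; 278 MeV is a hypothetical middle point.
masses = [358.0, 278.0, 172.0]
# Synthetic observable generated from a known line, NOT real lattice data.
values = [0.25 + 1.0e-6 * m * m for m in masses]
# Should be close to 0.25 + 1e-6 * 140**2 = 0.2696
print(extrapolate_to_physical(masses, values))
```

With real data each point carries a statistical error, so a weighted fit, and a check of how sensitive the answer is to the assumed functional form, would be needed.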

“The closer the masses of the quarks in the simulation are to reality, the more difficult the simulation,” Karpie said. “The lighter the quarks are, the more iterations are required in our solvers, so getting to the physical quark masses has been a major challenge in QCD.”
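Karpie’s point about solver iterations can be seen in a toy calculation. The sketch below is not the team’s solver: it applies the conjugate gradient method to a two-dimensional free scalar lattice operator, (-Δ + m²), used here as a stand-in for the far more complicated lattice Dirac operator. Because the eigenvalues of the lattice Laplacian on a periodic grid lie between 0 and 8, the condition number is roughly (8 + m²)/m², which grows as the mass shrinks, so the solver needs more iterations to reach the same accuracy.

```python
import math

# Toy stand-in for a quark solve: (-Laplacian + m^2) x = b on an L x L periodic
# lattice. Real lattice QCD inverts a much larger Dirac operator.


def apply_op(v, L, m2):
    """Return (-Laplacian + m2) v; v is a flat list indexed as x * L + y."""
    out = [0.0] * (L * L)
    for x in range(L):
        for y in range(L):
            nbrs = (v[((x + 1) % L) * L + y] + v[((x - 1) % L) * L + y]
                    + v[x * L + (y + 1) % L] + v[x * L + (y - 1) % L])
            out[x * L + y] = (4.0 + m2) * v[x * L + y] - nbrs
    return out


def dot(a, b):
    return sum(p * q for p, q in zip(a, b))


def conjugate_gradient(b, L, m2, tol=1e-8, max_iter=10_000):
    """Solve (-Laplacian + m2) x = b by CG; return (x, iterations used)."""
    x = [0.0] * len(b)
    r = b[:]  # residual b - A x for the initial guess x = 0
    p = r[:]
    rr = dot(r, r)
    b_norm = math.sqrt(dot(b, b))
    for k in range(1, max_iter + 1):
        ap = apply_op(p, L, m2)
        alpha = rr / dot(p, ap)
        x = [xi + alpha * pv for xi, pv in zip(x, p)]
        r = [ri - alpha * av for ri, av in zip(r, ap)]
        rr_new = dot(r, r)
        if math.sqrt(rr_new) < tol * b_norm:
            return x, k
        p = [ri + (rr_new / rr) * pv for ri, pv in zip(r, p)]
        rr = rr_new
    raise RuntimeError("CG did not converge")


if __name__ == "__main__":
    L = 32
    source = [0.0] * (L * L)
    source[0] = 1.0  # point source at the origin
    for m in (1.0, 0.3, 0.1):
        _, iters = conjugate_gradient(source, L, m * m)
        print(f"m = {m:4.2f}: {iters} CG iterations")
```

Running the script prints the iteration count for each mass; the lightest mass needs the most iterations, which is the same trend, in miniature, that makes physical-mass lattice QCD expensive.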

Algorithmic advances bring new opportunities

Joó, who has been using the Chroma code on OLCF systems since 2007, said that improvements in algorithms over the years have contributed to the ability to run simulations at the physical mass.

“Algorithmic improvements like multigrid solvers and their implementations in efficient software libraries such as QUDA, combined with hardware that can execute them, have made these kinds of simulations possible,” he said.
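The idea behind multigrid is easiest to see on a much simpler problem than QCD. The following is a textbook geometric multigrid V-cycle for the one-dimensional Poisson equation, offered only as an illustration; the multigrid methods used for lattice QCD are algebraic and considerably more sophisticated. A cheap smoother (weighted Jacobi) removes rapidly oscillating error, and the remaining smooth error is corrected on a grid twice as coarse, recursively. The script compares the number of V-cycles with the number of plain Jacobi sweeps needed for the same accuracy.

```python
import math

# Textbook geometric multigrid for -u'' = f on (0, 1) with u(0) = u(1) = 0,
# using n = 2**k - 1 interior points. Lattice QCD uses far more elaborate
# algebraic multigrid; this only illustrates the idea.


def residual(u, f, h):
    """r = f - A u for the standard 3-point Laplacian with zero boundaries."""
    n = len(u)
    r = [0.0] * n
    for i in range(n):
        left = u[i - 1] if i > 0 else 0.0
        right = u[i + 1] if i < n - 1 else 0.0
        r[i] = f[i] - (2.0 * u[i] - left - right) / (h * h)
    return r


def smooth(u, f, h, sweeps, omega=2.0 / 3.0):
    """Weighted Jacobi: damps high-frequency error quickly, smooth error slowly."""
    n = len(u)
    for _ in range(sweeps):
        new = u[:]
        for i in range(n):
            left = u[i - 1] if i > 0 else 0.0
            right = u[i + 1] if i < n - 1 else 0.0
            new[i] = (1 - omega) * u[i] + omega * 0.5 * (left + right + h * h * f[i])
        u = new
    return u


def restrict(r):
    """Full weighting from 2m + 1 fine points to m coarse points."""
    m = (len(r) - 1) // 2
    return [0.25 * r[2 * j] + 0.5 * r[2 * j + 1] + 0.25 * r[2 * j + 2]
            for j in range(m)]


def prolong(e_coarse, n):
    """Linear interpolation from m coarse points back to n = 2m + 1 fine points."""
    e = [0.0] * n
    for j, v in enumerate(e_coarse):
        e[2 * j] += 0.5 * v
        e[2 * j + 1] += v
        e[2 * j + 2] += 0.5 * v
    return e


def v_cycle(u, f, h, nu=3):
    """Smooth, solve for the error on a grid twice as coarse, correct, smooth."""
    if len(u) == 1:
        return [f[0] * h * h / 2.0]  # one interior point: 2u / h^2 = f
    u = smooth(u, f, h, nu)
    r_coarse = restrict(residual(u, f, h))
    e_coarse = v_cycle([0.0] * len(r_coarse), r_coarse, 2.0 * h, nu)
    u = [ui + ei for ui, ei in zip(u, prolong(e_coarse, len(u)))]
    return smooth(u, f, h, nu)


def iterations_to_converge(levels, method, tol=1e-8, max_iter=100_000):
    """Count V-cycles (or Jacobi sweeps) until ||r|| < tol * ||f|| for f = 1."""
    n = 2 ** levels - 1
    h = 1.0 / (n + 1)
    f = [1.0] * n
    u = [0.0] * n
    f_norm = math.sqrt(n)
    for k in range(1, max_iter + 1):
        u = v_cycle(u, f, h) if method == "multigrid" else smooth(u, f, h, 1)
        r = residual(u, f, h)
        if math.sqrt(sum(v * v for v in r)) < tol * f_norm:
            return k
    raise RuntimeError("did not converge")


for levels in (5, 6, 7):
    print(levels, iterations_to_converge(levels, "multigrid"))
print("jacobi, 31 points:", iterations_to_converge(5, "jacobi"))
```

The number of V-cycles stays small and nearly constant as the grid is refined, while plain Jacobi already needs thousands of sweeps on the smallest grid. That insensitivity to the hardest, slowly varying modes is what makes multigrid attractive for light-quark solves.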

Although Chroma is his bread-and-butter code, Joó said advances in code development will continue to provide opportunities to target new challenge problems in particle physics.

“Despite having worked with this same code all these years, new things still happen under the hood,” he said. “There will always be new challenges because there will always be new machines, new GPUs, and new methods that we will be able to take advantage of.”

In future studies, the team plans to explore gluons as well as obtain a full 3D image of the proton with its various components.

This research was funded by DOE’s Office of Science.

UT-Battelle manages Oak Ridge National Laboratory for DOE’s Office of Science, the single largest supporter of basic research in the physical sciences in the United States. DOE’s Office of Science is working to address some of the most pressing challenges of our time. For more information, visit https://energy.gov/science.