Achievement: Researchers from Oak Ridge National Laboratory (ORNL), in collaboration with researchers from Duke University, have developed NashAE, an unsupervised machine learning method for effectively disentangling latent representations. The research is motivated by an ORNL-specific scientific machine learning application: accelerator beam generation at the Spallation Neutron Source (SNS). Simulation results demonstrate that NashAE is highly effective at identifying the dimension and type of the latent space in synthesized accelerator beam data.

Significance and Impact:

Disentanglement of latent space features is the machine learning task of learning distinct data representations, each of which is sensitive only to one independent factor of the underlying data distribution. A disentangled representation is inherently interpretable: each disentangled unit has a consistent, unique, and independent interpretation of the data across its domain. Learning a disentangled latent space is a fundamental machine learning problem. An effective solution has great potential to address the lack of explainability in deep learning models, and it also provides a way to assess and quantify their robustness. However, existing unsupervised methods still require a considerable amount of prior knowledge about the latent space, such as the number and form of the disentangled factors.

The new method, NashAE, discovers a sparse and disentangled latent space using a standard auto-encoder (AE), without prior knowledge of the number or distribution of the underlying data-generating factors. The core intuition behind the approach is to reduce the redundant information between latent encoding elements, regardless of their distribution. To accomplish this, NashAE reduces the information shared between encoded continuous and/or discrete random variables using only samples drawn from the unknown underlying distributions.
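As a rough illustration of what "redundant information between latent elements" means in practice, the sketch below scores each element of a batch of latent samples by how well it can be predicted from the remaining elements. It is a simplified, hypothetical stand-in and not part of NashAE: the function `redundancy_scores` is introduced here for illustration, and it uses linear least squares rather than the nonlinear regression networks NashAE employs, so it only detects linear redundancy.

```python
import numpy as np


def redundancy_scores(z: np.ndarray) -> np.ndarray:
    """Hypothetical helper: R^2 of predicting each latent element from the others.

    z has shape (n_samples, m). A score near 1 means element i is (linearly)
    redundant given the other elements; near 0 means it carries information
    the other elements do not. Linear regression is a simplifying assumption;
    NashAE itself uses learned nonlinear regressors.
    """
    n, m = z.shape
    scores = np.empty(m)
    for i in range(m):
        others = np.delete(z, i, axis=1)                # z with element i removed
        design = np.hstack([others, np.ones((n, 1))])   # add an intercept column
        coef, *_ = np.linalg.lstsq(design, z[:, i], rcond=None)
        resid = z[:, i] - design @ coef
        var = np.var(z[:, i])
        # With an intercept, var(resid) / var(z_i) equals SS_res / SS_tot.
        scores[i] = 1.0 - np.var(resid) / var if var > 0 else 0.0
    return scores


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    a, b, c = rng.normal(size=(3, 1000))
    z_independent = np.stack([a, b, c], axis=1)
    z_redundant = np.stack([a, b, a + b], axis=1)  # third element duplicates information
    print(redundancy_scores(z_independent))  # all scores close to 0
    print(redundancy_scores(z_redundant))    # all scores ~1.0
```

In the redundant case every element is an exact linear function of the other two, so all three scores reach 1; a disentangled encoding in the sense used above would drive every score toward 0.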

Beyond AI explainability and robustness, effective disentanglement methods such as NashAE can also be applied to scientific machine learning problems such as efficient representation learning and surrogate modeling.

Research Details:

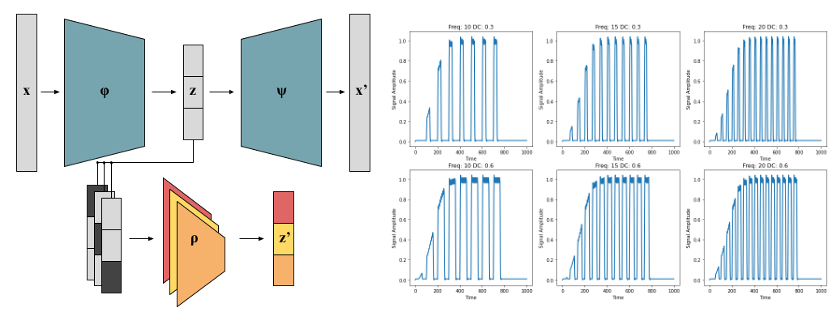

- NashAE is built on a standard auto-encoder (AE). Fig. 1 illustrates an example with a latent space of dimensionality m = 3. An ensemble of m independently trained regression networks ρ takes m copies of the latent encoding z, each with one element removed; each regression network tries to predict the value of its missing element from the remaining elements.

- The training loss combines the reconstruction loss L_R, which measures the quality of the AE reconstruction, and the adversarial loss L_A, which is based on the covariance between the latent elements of z and their predictions z′ from the regression networks.

- The NashAE model is trained with minibatch stochastic gradient descent.
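The three steps above can be sketched in PyTorch as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the class `NashAESketch`, the helpers `batch_covariance` and `train_step`, the layer sizes, the sigmoid-bounded latent, the weight `lam`, the learning rates, and the exact form of L_A (here, the summed batch covariance between each z_i and its prediction z′_i) are all hypothetical choices. Each minibatch step first updates the regressors ρ to predict z_i from the other elements, then updates the AE to minimize L_R + lam · L_A.

```python
import torch
from torch import nn


class NashAESketch(nn.Module):
    """Hypothetical NashAE-style model: an auto-encoder plus m leave-one-out regressors."""

    def __init__(self, x_dim: int, m: int, hidden: int = 64):
        super().__init__()
        if m < 2:
            raise ValueError("need at least two latent elements")
        # Sigmoid keeps each z_i bounded (an assumption of this sketch).
        self.encoder = nn.Sequential(
            nn.Linear(x_dim, hidden), nn.ReLU(), nn.Linear(hidden, m), nn.Sigmoid()
        )
        self.decoder = nn.Sequential(
            nn.Linear(m, hidden), nn.ReLU(), nn.Linear(hidden, x_dim)
        )
        # Regressor rho_i sees the m - 1 latent elements other than z_i.
        self.regressors = nn.ModuleList(
            [nn.Sequential(nn.Linear(m - 1, hidden), nn.ReLU(), nn.Linear(hidden, 1))
             for _ in range(m)]
        )

    def predict_each(self, z: torch.Tensor) -> torch.Tensor:
        """Return z' whose column i is rho_i applied to z with element i removed."""
        preds = []
        for i, rho in enumerate(self.regressors):
            z_minus_i = torch.cat([z[:, :i], z[:, i + 1:]], dim=1)
            preds.append(rho(z_minus_i))
        return torch.cat(preds, dim=1)


def batch_covariance(a: torch.Tensor, b: torch.Tensor) -> torch.Tensor:
    """Per-column (population) covariance between a and b over the batch dimension."""
    return ((a - a.mean(0)) * (b - b.mean(0))).mean(0)


def train_step(model, x, ae_opt, reg_opt, lam=1.0):
    # 1) Regressors: minimize MSE of predicting each z_i from the others.
    #    detach() keeps this update from changing the encoder.
    z = model.encoder(x).detach()
    reg_loss = ((model.predict_each(z) - z) ** 2).mean()
    reg_opt.zero_grad()
    reg_loss.backward()
    reg_opt.step()

    # 2) AE: minimize L_R + lam * L_A; only encoder/decoder weights are stepped.
    z = model.encoder(x)
    loss_r = ((model.decoder(z) - x) ** 2).mean()
    loss_a = batch_covariance(z, model.predict_each(z)).sum()
    ae_loss = loss_r + lam * loss_a
    ae_opt.zero_grad()
    ae_loss.backward()
    ae_opt.step()
    return reg_loss.item(), loss_r.item(), loss_a.item()


if __name__ == "__main__":
    torch.manual_seed(0)
    data = torch.randn(512, 10)  # placeholder data, not accelerator beam data
    model = NashAESketch(x_dim=10, m=3)
    ae_params = list(model.encoder.parameters()) + list(model.decoder.parameters())
    ae_opt = torch.optim.SGD(ae_params, lr=1e-2)
    reg_opt = torch.optim.SGD(model.regressors.parameters(), lr=1e-2)
    for epoch in range(5):
        for batch in data[torch.randperm(len(data))].split(64):  # shuffled minibatches
            reg_l, rec_l, adv_l = train_step(model, batch, ae_opt, reg_opt, lam=1.0)
    print(f"reconstruction={rec_l:.4f} adversarial={adv_l:.4f}")
```

The sign of L_A in this sketch is a deliberate choice: if a regressor approximates the conditional mean E[z_i | z_{-i}], its covariance with z_i equals the variance of that conditional mean, which is nonnegative and reaches zero only when the other elements carry no learnable information about z_i. Minimizing it therefore pushes the encoder toward non-redundant latent elements.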

Sponsor/Funding: DOE ASCR (DnC2S)

PI and affiliation: Frank Liu, Computer Science and Mathematics Division, ORNL

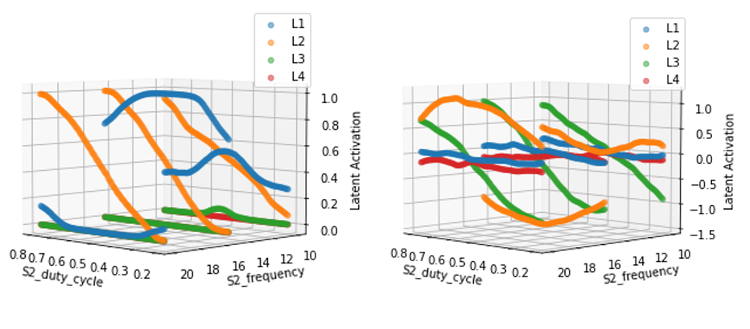

Summary: We have developed NashAE, a new adversarial method for disentangling factors of variation that makes minimal assumptions about the number and form of the factors to extract. We have shown that the method yields a more statistically independent and disentangled AE latent space. Quantitative simulation results indicate that this flexible method is more reliable at retrieving the true number of data-generating factors and has a higher capacity to align its latent representations with salient data characteristics.