Achievement:

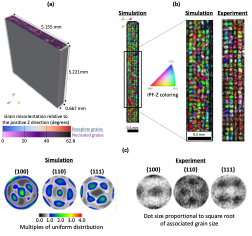

Researchers associated with the ExaAM project, part of the Exascale Computing Project, developed ExaCA, a cellular automata (CA)-based model of grain-scale alloy solidification that runs on both CPU and GPU architectures. ExaCA was created by using the Kokkos library to rewrite a previous CA model that relied on MPI alone for parallelization on CPUs (MPI-CA). The rewrite enables performance-portable simulation on CPUs (with and without threading) and on GPUs, with a focus on the resources of the Oak Ridge Leadership Computing Facility. Converting MPI-CA into a performant Kokkos-based code required adding atomic operations, minimizing thread divergence, and optimizing GPU communication. Performance was benchmarked on Summit for directional solidification and additive manufacturing (AM) test problems. In node-level comparisons, ExaCA on GPUs generally outperformed the original MPI-only CA code on CPUs by roughly a factor of 10. The GPU-specific code optimizations also did not significantly reduce CPU performance across the AM problems. A large-scale, billion-cell AM microstructure simulation was performed and compared with experimental data from a corresponding AM build. It showed quantitative agreement in grain size and shape distributions and qualitative agreement in several aspects of texture. In addition to the published journal article, ExaCA was released as open source to the scientific community.
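In a parallel CA update, several growing grains can try to capture the same liquid cell at the same moment, which is one reason atomic operations are needed. The sketch that follows is a simplified illustration, not ExaCA's code. It emulates an atomic compare-and-swap with a lock. In a Kokkos code, a function such as `Kokkos::atomic_compare_exchange` would play this role. The `CellGrid` class, the `try_capture` helper, and the use of 0 to mark liquid cells are all hypothetical.

```python
import threading

LIQUID = 0  # hypothetical marker: grain ID 0 means the cell is still liquid


class CellGrid:
    """Hypothetical flat array of per-cell grain IDs."""

    def __init__(self, n_cells):
        self.grain_id = [LIQUID] * n_cells
        self._lock = threading.Lock()  # stands in for a hardware atomic

    def compare_exchange(self, index, expected, desired):
        """Emulated atomic compare-and-swap: write `desired` only if the
        cell still holds `expected`; always return the value seen before."""
        with self._lock:
            old = self.grain_id[index]
            if old == expected:
                self.grain_id[index] = desired
            return old


def try_capture(grid, cell, grain, winners):
    # A grain claims the liquid cell only if no other grain got there first.
    if grid.compare_exchange(cell, LIQUID, grain) == LIQUID:
        winners.append(grain)


grid = CellGrid(n_cells=10)
winners = []
# Eight hypothetical grains (IDs 1-8) race to capture cell 5.
threads = [threading.Thread(target=try_capture, args=(grid, 5, g, winners))
           for g in range(1, 9)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(len(winners), grid.grain_id[5] == winners[0])  # prints: 1 True
```

Without the compare-and-swap, two threads could both read "liquid" and both write their own grain ID, so the final owner of the cell would depend on timing.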

Significance and Impact:

Researchers developed ExaCA, a grain-scale application for predicting alloy microstructure that performs well on CPU and GPU hardware from multiple vendors, including Summit and Crusher (the pre-Frontier OLCF test bed). The roughly 10x GPU-over-CPU speedup for AM test problems substantially increases the number and total size of solidification simulations that are feasible. Because a single source code targets many architectures, ExaCA can simulate both small problems on a laptop and large problems on Frontier. A large-scale AM simulation of billions of CA cells (among the largest CA calculations of AM solidification) ran on the GPUs of only four Summit nodes in 8.5 hours. Its results compared favorably with an AM benchmark build performed at NIST [1]. This demonstrates that ExaCA can accurately simulate complex microstructures using a fraction of the compute time and resources previously required. Because ExaCA is open source, other researchers can now use the code to model diverse AM processes.

Research Details

- ExaCA was developed by starting from an MPI-based C++ code for grain-scale alloy solidification and converting its data structures and algorithms to Kokkos-supported functionality for parallelism and data access on both CPU and GPU. Performance changes relative to the baseline MPI-only code were documented at each development step

- On Summit, ExaCA performance with the Kokkos Serial (CPU), OpenMP (CPU), and CUDA (GPU) backends was compared with the baseline MPI-only code for both directional solidification and laser raster-based AM test problems

- Additional test problems were used to examine the effect of MPI domain decomposition, thread scalability, per-kernel CPU and GPU timing breakdowns, and strong and weak scaling

- A large-scale multilayer AM-based problem was simulated on GPUs to validate ExaCA’s microstructure prediction against characterization results from an AM benchmark experiment
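Porting a grid code to GPUs usually means storing cell data in flat 1D arrays, and MPI domain decomposition means giving each rank a slab of the grid plus ghost (halo) cells copied from its neighbors. ExaCA's actual decomposition scheme and array layout are not described here. The following sketch assumes a generic 1D slab decomposition along Y with one ghost row on each interior side, and all function names are hypothetical.

```python
def slab_decomposition(ny, n_ranks):
    """Split ny rows of cells along Y into contiguous slabs, one per rank.

    The first ny % n_ranks ranks own one extra row, so slab sizes differ
    by at most one row. Returns (start, end) pairs with end exclusive.
    """
    base, extra = divmod(ny, n_ranks)
    ranges = []
    start = 0
    for rank in range(n_ranks):
        size = base + (1 if rank < extra else 0)
        ranges.append((start, start + size))
        start += size
    return ranges


def with_halo(owned, ny):
    """Extend an owned (start, end) range by one ghost row on each side
    that borders another rank; the global domain edges get no ghost row."""
    start, end = owned
    return (max(start - 1, 0), min(end + 1, ny))


def flat_index(x, y, z, nx, ny_local):
    """Map 3D cell coordinates to a position in a flattened 1D array,
    with x varying fastest, then y, then z."""
    return x + nx * (y + ny_local * z)


slabs = slab_decomposition(ny=10, n_ranks=3)
print(slabs)                                  # [(0, 4), (4, 7), (7, 10)]
print([with_halo(s, 10) for s in slabs])      # [(0, 5), (3, 8), (6, 10)]
print(flat_index(1, 2, 3, nx=4, ny_local=5))  # 69
```

The ghost rows are what each rank exchanges with its neighbors after an update step, so reducing or batching that exchange is one lever for optimizing GPU communication.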
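Strong and weak scaling studies are normally summarized as parallel efficiencies. The sketch that follows uses the standard textbook definitions, which are not specific to ExaCA. In strong scaling, the total problem size is fixed, so ideal run time falls in proportion to the node count. In weak scaling, the problem size per node is fixed, so ideal run time stays constant. The function names and the timings in the example are hypothetical and are not measured ExaCA results.

```python
def strong_scaling_efficiency(t_ref, n_ref, t_n, n):
    """Strong scaling (fixed total problem size).

    The ideal time on n nodes is t_ref * n_ref / n; efficiency is that
    ideal time divided by the measured time t_n.
    """
    return (t_ref * n_ref) / (t_n * n)


def weak_scaling_efficiency(t_ref, t_n):
    """Weak scaling (fixed problem size per node).

    The ideal time stays at t_ref as nodes are added.
    """
    return t_ref / t_n


# Hypothetical timings in seconds, for illustration only:
print(strong_scaling_efficiency(t_ref=100.0, n_ref=1, t_n=25.0, n=4))  # 1.0
print(strong_scaling_efficiency(t_ref=100.0, n_ref=1, t_n=50.0, n=4))  # 0.5
print(weak_scaling_efficiency(t_ref=100.0, t_n=125.0))                 # 0.8
```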

Facility: ExaCA was tested and run on resources at the Oak Ridge Leadership Computing Facility (OLCF), particularly Summit and Crusher (pre-Frontier).

Sponsor/Funding: Exascale Computing Project

PI and affiliation: John Turner, Computational Engineering Program Director, ORNL, and Jim Belak, Senior Scientist, LLNL

Team: Matt Rolchigo, Samuel Temple Reeve, Benjamin Stump, Gerald L. Knapp, John Coleman, Alex Plotkowski (ORNL), Jim Belak (LLNL).

Citation and DOI: M. Rolchigo, S.T. Reeve, B. Stump, G.L. Knapp, J. Coleman, A. Plotkowski, and J. Belak. ExaCA: a performance portable exascale cellular automata application for alloy solidification modeling, Comput. Mater. Sci., 214, 111692 (2022); DOI: https://doi.org/10.1016/j.commatsci.2022.111692

Summary: An exascale-capable, cellular automata-based model of as-solidified alloy grain structure (ExaCA) was developed as part of the ExaAM project. ExaAM is an initiative within the Exascale Computing Project (ECP) to develop, test, and optimize an exascale-capable, coupled, and self-consistent model of AM parts. The CA code was parallelized using MPI and Kokkos, the latter enabling simulation across hardware from a single-source implementation. Testing on Summit CPUs and GPUs showed CPU performance comparable to the baseline MPI-only CA code and a 5-20x speedup on GPUs for AM test problems. The improved performance of CA through GPU utilization and the performance-portable nature of ExaCA will enable accurate part-scale modeling of complex process-microstructure relationships on current and future generations of high-performance computing resources.

Reference:

[1]: M. Stoudt et al. “Location-specific microstructure characterization within IN625 additive manufacturing benchmark test artifacts”. In: Integrating Materials and Manufacturing Innovation (online) (2020). Accessed: 2021-12-09. url: https://tsapps.nist.gov/publication/get_pdf.cfm?pub_id=928265.