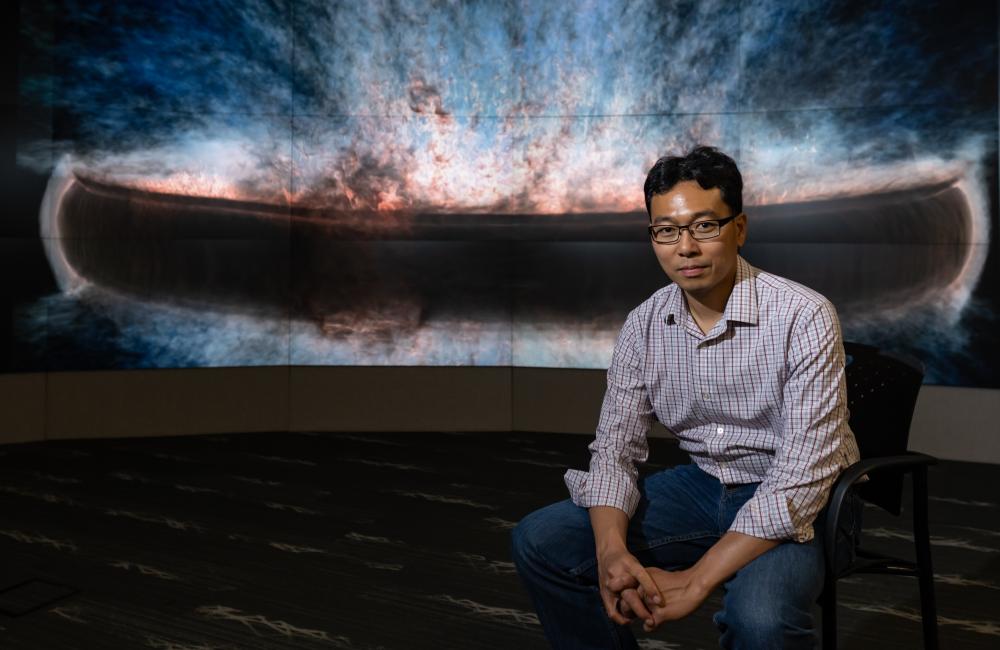

Reuben Budiardja, an Oak Ridge National Laboratory computational scientist, worked with the early users who helped prepare Frontier, the world’s first exascale supercomputer, for scientific operations. Credit: Carlos Jones/ORNL, U.S. Dept. of Energy

With the world’s first exascale supercomputer now fully open for scientific business, researchers can thank the early users who helped get the machine up to speed.

Frontier, the HPE Cray EX supercomputer at the Department of Energy’s Oak Ridge National Laboratory, set a new record for computational power when it debuted in May 2022 at 1.1 exaflops, or 1.1 quintillion calculations per second. That’s more than a billion billion operations each second. If every person on Earth performed one calculation per second, it would take them more than four years to match what Frontier does in a single second.
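The comparison to Earth’s population can be checked with rough arithmetic. The sketch below assumes about 8 billion people, each performing one calculation per second; neither figure is stated in the article, and the function name is purely illustrative.

```python
# Back-of-envelope check of the "population of Earth" comparison.
FRONTIER_FLOPS = 1.1e18            # 1.1 exaflops, as reported in the article
WORLD_POPULATION = 8.0e9           # assumption: roughly 8 billion people
CALCS_PER_PERSON_PER_SEC = 1.0     # assumption: one calculation per person per second
SECONDS_PER_YEAR = 365.25 * 24 * 3600


def years_to_match_one_second(flops, population, rate_per_person):
    """Years a population needs to equal one second of machine work."""
    seconds_needed = flops / (population * rate_per_person)
    return seconds_needed / SECONDS_PER_YEAR


years = years_to_match_one_second(
    FRONTIER_FLOPS, WORLD_POPULATION, CALCS_PER_PERSON_PER_SEC
)
print(f"{years:.1f} years")  # prints "4.4 years"
```

Under these assumptions the result lands a little above four years, consistent with the figure quoted above. A smaller population estimate would lengthen the time accordingly.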

Frontier opened to full operations in April 2023, granting more than 1,200 scientific users access to its circuits to grapple with more than 160 problems that range from designing nuclear fusion reactors to modeling potential cures for cancer to unraveling the origins of the universe. But the big machine wouldn’t have been ready so soon if not for the work of the Oak Ridge Leadership Computing Facility’s Center for Accelerated Application Readiness, or CAAR, which enlisted eight research teams to test-drive the system and work out the kinks.

CAAR brought together scientific users, OLCF computing experts, vendors and developers to work through software applications and figure out how to wring the best possible performance from the system. For the teams, the program offers a head start on solving their grand challenges and on learning how to adapt their project codes to the system’s architecture; for the OLCF and vendors, it’s a heads-up on which tweaks to make so the system works as needed.

“The early users’ help has been critical in preparing Frontier,” said ORNL’s Reuben Budiardja, a computational scientist who worked with the early users and presented a paper on the efforts at the International Supercomputing Conference 2023 in May. “They’ve helped us solve many early software issues and get the hardware running at full capacity. It’s been a partnership to ready Frontier for production and lay a scientific foundation as we go.”

CAAR’s origin dates back two supercomputing generations to Titan, the OLCF’s 27-petaflop Cray XK7 system. Titan marked a breakthrough in high-performance computing by combining CPUs, which act as the machine’s brains, with GPUs, which lighten the computational load for the CPUs and kick processing speeds into high gear while keeping energy demands down. CAAR teams have helped test every OLCF supercomputer since then: first Summit, which previously held the title of world’s fastest computer at 200 petaflops, or 200 quadrillion calculations per second, and now Frontier.

The eight teams that took part in CAAR for Frontier were:

- Cholla, led by the University of Pittsburgh’s Evan Schneider, which seeks to simulate a galaxy comparable to the Milky Way and model the formation of stars.

- Combinatorial Metrics, or CoMet, led by ORNL’s Dan Jacobson, which seeks to model complex biological systems for everything from bioenergy research to clinical genomics.

- GPUs for Extreme-Scale Turbulence Simulations, or GESTS, led by Georgia Tech’s P. K. Yeung, which seeks to simulate the turbulent fluid flows found in ocean dynamics, astrophysics, combustion and the dispersal of pollution through the atmosphere.

- Lattice Boltzmann Methods for Porous Media, or LBPM, led by Virginia Tech’s James Edward McClure, which seeks to simulate the behavior of fluids within rock formations.

- Locally Self-Consistent Multiple Scattering, or LSMS, led by ORNL’s Markus Eisenbach, which seeks to simulate the atomic structures of condensed matter systems at full scale.

- Nanoscale Molecular Dynamics, or NAMD, led by the University of Illinois at Urbana-Champaign’s Emad Tajkhorshid, which seeks to simulate large biological systems such as the Zika virus.

- Nuclear-Coupled Cluster Oak Ridge, or NuCCOR, led by Michigan State University’s Morten Hjorth-Jensen, which seeks to simulate complex nuclear phenomena such as fission reactions.

- Particle-in-Cell on Graphics Processing Units, or PIConGPU, led by the University of Delaware’s Sunita Chandrasekaran, which seeks to simulate the complex plasma dynamics necessary to develop advanced particle accelerators.

The studies conducted by these teams require simulations beyond the capability of any supercomputer prior to Frontier. Cholla, for example, seeks to model a roughly 20,000-parsec galaxy, complete with stars and other astronomical phenomena. Just 1 parsec equals about 30 trillion kilometers.
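To put that scale in more familiar units, the sketch below converts the galaxy’s roughly 20,000-parsec extent into kilometers and light-years. The conversion constants are standard astronomical values rather than figures from the article, and the variable names are illustrative.

```python
# Convert the ~20,000-parsec galaxy size quoted in the article to other units.
KM_PER_PARSEC = 3.0857e13      # standard value, about 30.9 trillion km
KM_PER_LIGHT_YEAR = 9.4607e12  # standard value, about 9.46 trillion km
GALAXY_PARSECS = 20_000        # approximate extent, as stated in the article

extent_km = GALAXY_PARSECS * KM_PER_PARSEC
extent_ly = extent_km / KM_PER_LIGHT_YEAR

print(f"{extent_km:.2e} km")            # on the order of 6e17 km
print(f"{extent_ly:,.0f} light-years")  # roughly 65,000 light-years
```

At that size, light itself would need tens of thousands of years to cross the simulated galaxy.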

“We want to run this simulation for billions of years,” said Schneider, who leads the Cholla team. “Until a machine like Frontier existed, this wasn’t feasible. We want to be able to model how this galaxy like ours, which is basically a disc made up of gas and dust and stars that continually collapses and forms new stars, behaves over long periods of time so we can compare it to observational data and see how the physics play out in order to better understand how the galaxy we live in works.”

The challenges presented by Cholla and the other projects made for an ideal stress test, Budiardja said.

“Each one of these has helped us find ways to exploit Frontier’s unique architecture for better performance,” he said. “Everyone who uses Frontier will benefit from these early efforts.”

Support for this research came from the DOE Office of Science’s Advanced Scientific Computing Research program and from DOE’s Exascale Computing Project. The OLCF is a DOE Office of Science user facility at ORNL.

UT-Battelle manages ORNL for DOE’s Office of Science, the single largest supporter of basic research in the physical sciences in the United States. The Office of Science is working to address some of the most pressing challenges of our time. For more information, visit https://energy.gov/science. — Matt Lakin