OAK RIDGE, Tenn., June 19, 2018 – In an effort to reduce errors in the analyses of diagnostic images by health professionals, a team of researchers from the Department of Energy’s Oak Ridge National Laboratory has improved understanding of the cognitive processes involved in image interpretation.

The work, published in the Journal of Medical Imaging, has the potential to improve health outcomes for the hundreds of thousands of American women affected by breast cancer each year. Breast cancer is the second leading cause of cancer death in women, and early detection is critical for effective treatment. Catching the disease early requires an accurate interpretation of a patient’s mammogram; conversely, a radiologist’s misinterpretation of a mammogram can have enormous consequences for a patient’s future.

The ORNL-led team, which included Gina Tourassi, Hong-Jun Yoon and Folami Alamudun, as well as Paige Paulus of the University of Tennessee’s Department of Mechanical, Aerospace, and Biomedical Engineering, found that analyses of mammograms by radiologists were significantly influenced by context bias, or the radiologist’s previous diagnostic experiences.

While new radiology trainees were most susceptible to the phenomenon, the team found that even more experienced radiologists fall victim to some degree.

“These findings will be critical in the future training of medical professionals to reduce errors in the interpretations of diagnostic imaging and will inform the future of human and computer interactions going forward,” said Gina Tourassi, team lead and director of ORNL’s Health Data Science Institute.

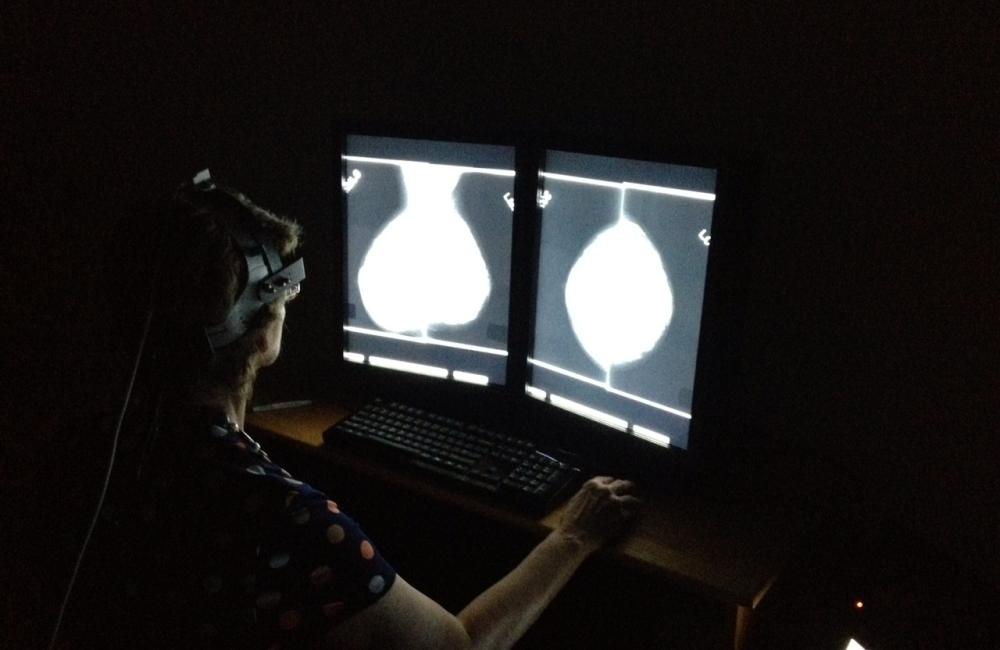

The research required the design of an experiment aimed at following the eye movements of radiologists at various skill levels to better understand the context bias involved in their individual interpretations of the images.

The experiment, designed by Yoon, followed the eye movements of three board-certified radiologists and seven radiology residents as they analyzed 100 mammographic studies from the University of South Florida’s Digital Database for Screening Mammography. The 400 images (four per study), representing a mix of cancer, no cancer, and cases that mimicked cancer but were benign, were specifically selected to cover a range of cases similar to that found in a clinical setting.

The participants, who were grouped by levels of experience and had no prior knowledge of what was contained in the individual X-rays, were outfitted with a head-mounted eye-tracking device designed to record their “raw gaze data,” which characterized their overall visual behavior. The study also recorded the participants’ diagnostic decisions via the location of suspicious findings along with their characteristics according to the BI-RADS lexicon, the radiologists’ reporting scheme for mammograms.

By computing a measure known as a fractal dimension on the individual participants’ scan path (map of eye movements) and performing a series of statistical calculations, the researchers were able to discern how the eye movements of the participants differed from mammogram to mammogram.

They also calculated the deviation in the context of the different image categories, such as images that show cancer and those that may be easier or more difficult to decipher.
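For readers unfamiliar with the measure, the fractal dimension of a scan path can be illustrated with a box-counting estimate. The following is a minimal sketch, not the team’s implementation: the article does not say which estimator the researchers used, and the function name, the `(n, 2)` gaze-point format and the grid scales are all assumptions chosen for illustration.

```python
import numpy as np

def box_counting_dimension(points, scales=(2, 4, 8, 16, 32, 64)):
    """Estimate the box-counting dimension of a 2-D gaze path.

    points: array of shape (n, 2) holding gaze coordinates
            (hypothetical format; real eye-tracker output will differ).
    scales: number of grid cells per axis at each resolution (assumed values).
    """
    pts = np.asarray(points, dtype=float)

    # Rescale each axis to [0, 1] so the same grids apply to any recording.
    mins = pts.min(axis=0)
    span = pts.max(axis=0) - mins
    span[span == 0] = 1.0  # avoid dividing by zero for a degenerate axis
    unit = (pts - mins) / span

    counts = []
    for k in scales:
        # Assign every gaze sample to a cell of a k-by-k grid.
        cells = np.minimum((unit * k).astype(int), k - 1)
        # Count how many distinct cells the path visits at this resolution.
        counts.append(len(np.unique(cells, axis=0)))

    # The dimension is the slope of log(count) against log(scale).
    slope, _ = np.polyfit(np.log(scales), np.log(counts), 1)
    return slope
```

Under these assumptions, a densely sampled straight line yields a value close to 1 and a densely filled square a value close to 2; a scan path that wanders over more of the image falls somewhere in between. The estimate treats the path as a set of sampled points, so sparse recordings would need interpolation between samples first.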

The findings show great promise for the future of personalized medical decision support and extend earlier work for which the team received an R&D 100 Award.

But the experiment was only half the accomplishment; effectively tracking the participants’ eye movements meant employing real-time sensor data, which logged nearly every movement of the participants’ eyes. And with 10 observers interpreting 100 cases, it wasn’t long before the data started adding up.

Managing such a data-intensive task manually would have been impractical, so the researchers turned to artificial intelligence to interpret the results efficiently. Fortunately, they had access to ORNL’s Titan supercomputer, one of the country’s most powerful systems. With Titan, the team was able to rapidly train the deep learning models needed to analyze the large datasets. Similar studies in the past have used aggregation methods to handle such enormous datasets, but the ORNL team processed the full data sequence, a critical step because, over time, this sequence revealed differences in the eye paths of the participants as they analyzed the various mammograms.

In a related paper published in the Journal of Human Performance in Extreme Environments, the team demonstrated how convolutional neural networks, a type of AI commonly applied to the analysis of images, significantly outperformed other methods, such as deep neural networks and deep belief networks, in parsing the eye tracking data and, by extension, validating the experiment as a means to measure context bias.

Furthermore, while the experiment focused on radiology, the resulting data drove home the need for “intelligent interfaces and decision support systems” to assist human performance across a range of complex tasks including air-traffic control and battlefield management.

While machines won’t replace radiologists (or other humans involved in rapid, high-impact decision-making) any time soon, said Tourassi, they do have enormous potential to assist health professionals and other decision makers in reducing errors due to phenomena such as context bias.

“These studies will inform human/computer interactions going forward as we use AI to augment and improve human performance,” she said.

The research was supported by ORNL’s Laboratory Directed Research and Development program.

This work leveraged the Oak Ridge Leadership Computing Facility, a DOE Office of Science user facility.

UT-Battelle manages ORNL for DOE’s Office of Science. The Office of Science is the single largest supporter of basic research in the physical sciences in the United States, and is working to address some of the most pressing challenges of our time. For more information, please visit http://energy.gov/science/.