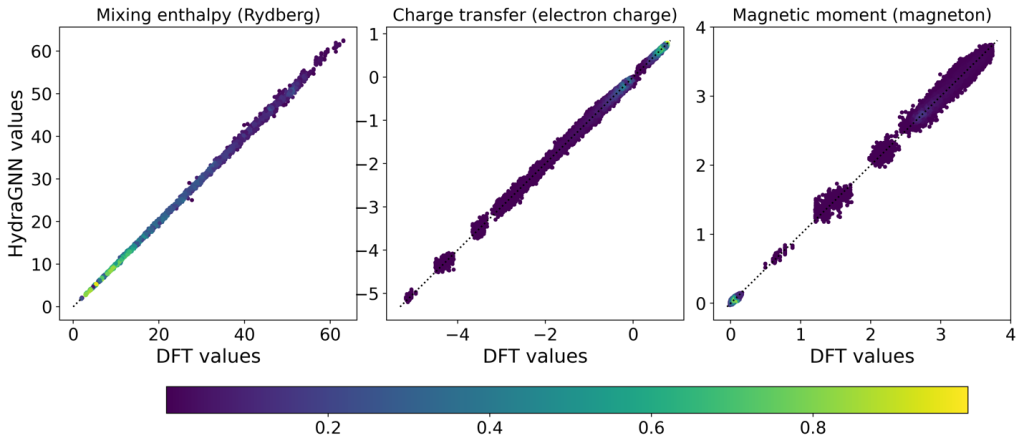

Researchers from the Computing and Computational Sciences Directorate (CCSD) at Oak Ridge National Laboratory (ORNL) have developed a distributed PyTorch implementation of multi-headed graph convolutional neural networks (GCNNs) that produces fast and accurate predictions of graph properties [2]. The Artificial Intelligence for Scientific Discovery (AISD) Thrust of the Artificial Intelligence Initiative at ORNL funded this effort in order to predict material properties from atomic information.

In this context, a GCNN abstracts the lattice structure of a solid material as a graph in which atoms are modeled as nodes and metallic bonds as edges. This representation naturally incorporates information about the structure of the material, eliminating the computationally expensive data pre-processing that standard neural network (NN) approaches require.

We trained GCNNs on ab-initio density functional theory (DFT) data for copper-gold (CuAu) [2] and iron-platinum (FePt) [3]. The data were generated by running the LSMS-3 code, which implements a locally self-consistent multiple scattering method, on the OLCF supercomputers Titan and Summit. The GCNN is orders of magnitude faster than the ab-initio DFT simulation at estimating the total energy, charge transfer, and magnetic moment for a given atomic configuration of the lattice structure. We also compared the predictive performance of GCNN models against a standard NN, such as a dense feedforward multi-layer perceptron (MLP), using the root-mean-squared error (RMSE) to quantify predictive quality. The attainable accuracy of GCNNs is at least an order of magnitude better than that of the MLP.
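
To make the graph abstraction concrete (atoms as nodes, bonds as edges), the following is a minimal sketch and not the ORNL implementation. It assumes that two atoms are bonded when their distance falls within a cutoff radius and that each node carries a single feature, the atomic number. The function name `build_lattice_graph`, the `cutoff` parameter, and the toy coordinates are all hypothetical and chosen only for illustration.

```python
import math
from itertools import combinations

# Atomic numbers used as a single node feature (an illustrative choice).
ATOMIC_NUMBER = {"Cu": 29, "Au": 79}


def build_lattice_graph(atoms, cutoff):
    """Turn a list of (element, (x, y, z)) atoms into node features and edges.

    Assumption: two atoms share an edge when their Euclidean distance is
    at most `cutoff`. The source does not state how edges are chosen.
    """
    node_features = [ATOMIC_NUMBER[element] for element, _ in atoms]

    edges = []
    # Visit every unordered pair of atoms exactly once.
    for (i, (_, pos_i)), (j, (_, pos_j)) in combinations(enumerate(atoms), 2):
        if math.dist(pos_i, pos_j) <= cutoff:
            edges.append((i, j))
    return node_features, edges


# Toy configuration: four atoms on the corners of a unit square.
atoms = [
    ("Cu", (0.0, 0.0, 0.0)),
    ("Au", (1.0, 0.0, 0.0)),
    ("Cu", (1.0, 1.0, 0.0)),
    ("Au", (0.0, 1.0, 0.0)),
]

# A cutoff of 1.1 keeps the four sides and drops the two diagonals.
node_features, edges = build_lattice_graph(atoms, cutoff=1.1)
print(node_features)
print(edges)
```

The resulting node features and edge list are the kind of input a graph neural network consumes directly, which is why no hand-crafted structural descriptors are needed in this sketch.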

Although the code was originally developed in response to the scientific needs addressed by the AISD Thrust of the AI Initiative, its framework is general enough to support other scientific applications at ORNL, such as transportation, power grid, and cybersecurity.

This research was funded by the AI Initiative, as part of the Laboratory Directed Research and Development Program of Oak Ridge National Laboratory, managed by UT-Battelle, LLC, for the U.S. Department of Energy (DOE).