The Science

ORNL researchers developed a novel nonlinear level set learning method to reduce dimensionality in high-dimensional function approximation. This method:

- Develops reversible neural networks (RevNets) and pseudo-reversible neural networks (PRNNs) to handle level sets with different types of geometries.

- Introduces new loss functions to train nonlinear transformations, i.e., RevNets or PRNNs, to learn a low-dimensional parameterization of the level sets.
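To make the term "reversible" concrete, the following is a minimal sketch of an additive-coupling block, one common way to build a network layer with an exact inverse. It is an illustration only and is not taken from the publications listed in this highlight: the helper names (`make_linear`, `mlp`, `forward`, `inverse`), the single tanh layer, and the random weights are all assumptions made for the example.

```python
import math
import random

def make_linear(n_in, n_out, rng):
    """Hypothetical helper: a small random weight matrix stored as a list of rows."""
    return [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]

def mlp(weights, v):
    """One tanh layer, tanh(W v). Stands in for any sub-network (assumption)."""
    return [math.tanh(sum(w * x for w, x in zip(row, v))) for row in weights]

def forward(x, w_f, w_g):
    """Additive coupling: split x into (u, v), then
    y1 = u + F(v) and y2 = v + G(y1)."""
    half = len(x) // 2
    u, v = x[:half], x[half:]
    y1 = [a + b for a, b in zip(u, mlp(w_f, v))]
    y2 = [a + b for a, b in zip(v, mlp(w_g, y1))]
    return y1 + y2

def inverse(y, w_f, w_g):
    """Exact inverse of forward: undo the two additions in reverse order."""
    half = len(y) // 2
    y1, y2 = y[:half], y[half:]
    v = [a - b for a, b in zip(y2, mlp(w_g, y1))]
    u = [a - b for a, b in zip(y1, mlp(w_f, v))]
    return u + v

rng = random.Random(0)
dim = 4
w_f = make_linear(dim // 2, dim // 2, rng)
w_g = make_linear(dim // 2, dim // 2, rng)

x = [0.3, -1.2, 0.7, 2.0]
z = forward(x, w_f, w_g)        # transformed coordinates
x_rec = inverse(z, w_f, w_g)    # recovered input
max_err = max(abs(a - b) for a, b in zip(x, x_rec))
```

Because the inverse only subtracts what the forward pass added, `x_rec` matches `x` up to floating-point rounding no matter what the sub-networks `F` and `G` compute. This is the property that makes such blocks convenient for learning an invertible change of coordinates.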

The Impact

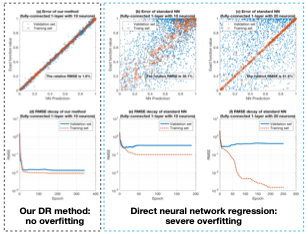

- This method significantly alleviates the overfitting issue when approximating a high-dimensional function with limited data (see Figure 1).

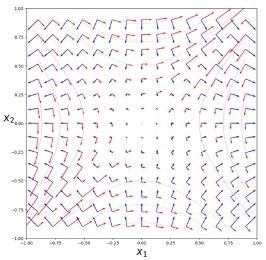

- The method successfully learns level sets of functions with critical points, such as saddle points, in the input domain (see Figure 2).

Team Members: Guannan Zhang, Jacob Hinkle, Jiaxin Zhang (all of ORNL), Yuankai Teng, Zhu Wang, Lili Ju (all of the University of South Carolina), Anthony Gruber (Florida State University).

Publication(s) for this work:

- G. Zhang, J. Hinkle, J. Zhang, “Learning nonlinear level sets for dimensionality reduction in function approximation.” Advances in Neural Information Processing Systems, 32, 2019.

- Y. Teng, et al., “Level set learning with pseudo-reversible neural networks for nonlinear dimension reduction in function approximation.” Submitted to SIAM Journal on Scientific Computing (https://arxiv.org/pdf/2112.01438.pdf)

Summary

One of the major sources of uncertainty in building predictive models is the limited information (i.e., training data) available when the input space is high-dimensional. In this case, an effective way to reduce uncertainty is to reduce the dimension of the input space. The question the team set out to answer is how to effectively reduce the input dimension of a function whose input variables are independent. The researchers developed two types of nonlinear level set learning models during the project. In FY20, they developed a RevNet-based level set learning method, whose key novelty is the definition of a new loss function for training the RevNets. The RevNet-based method performed well (see Figure 1), but the team found that it cannot handle functions with critical points, such as the saddle points shown in Figure 2. In FY21, the researchers improved their method by replacing the RevNet with a PRNN. Because a PRNN is not reversible, they added a new regularization term to the loss to encourage pseudo-reversibility. With the PRNN, the method can learn level sets of functions that have critical points, which has not been achieved by state-of-the-art dimension reduction methods.
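The idea of a regularization term that encourages pseudo-reversibility can be sketched as follows. This highlight does not state the exact form of the term, so the sketch assumes a mean squared reconstruction error between each sample and its round trip through a forward map and a separately parameterized backward map. The function names, the toy linear maps, and the weight `lam` are hypothetical and chosen only for illustration.

```python
def pseudo_reversibility_penalty(encode, decode, samples):
    """Assumed form of the regularizer: mean squared error between each
    sample x and its round trip decode(encode(x))."""
    total = 0.0
    for x in samples:
        x_rec = decode(encode(x))
        total += sum((a - b) ** 2 for a, b in zip(x, x_rec))
    return total / len(samples)

def total_loss(fit_loss, encode, decode, samples, lam=1.0):
    """Hypothetical combined objective: a task loss plus the weighted penalty."""
    return fit_loss + lam * pseudo_reversibility_penalty(encode, decode, samples)

# Toy maps standing in for the two networks (assumptions, not the PRNN itself).
def encode(x):
    return [x[0] + x[1], x[0] - x[1]]

def decode_exact(z):
    # True inverse of encode, so the round trip reproduces x.
    return [(z[0] + z[1]) / 2, (z[0] - z[1]) / 2]

def decode_rough(z):
    # Ignores the second coordinate, so the round trip loses information.
    return [0.5 * z[0], 0.5 * z[0]]

samples = [[1.0, 2.0], [0.5, -0.5], [3.0, 0.0]]
penalty_exact = pseudo_reversibility_penalty(encode, decode_exact, samples)
penalty_rough = pseudo_reversibility_penalty(encode, decode_rough, samples)
loss_rough = total_loss(0.0, encode, decode_rough, samples, lam=0.1)
```

The penalty vanishes when the backward map truly inverts the forward map and grows as the round trip drifts from the input. Minimizing it alongside the main loss therefore pushes the pair toward being approximately, rather than exactly, invertible.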

Acknowledgement of Support

This material was based upon work supported by the U.S. Department of Energy, Office of Science, Office of Advanced Scientific Computing Research, Applied Mathematics program under contract and award number ERKJ352 at Oak Ridge National Laboratory (ORNL). ORNL is operated by UT-Battelle, LLC, for DOE under Contract DE-AC05-00OR22725.