The Science

Deep Markov Models (DMMs) generalize Hidden Markov Models to learn generative models of complex, high-dimensional dynamic systems. Despite the popularity of DMMs, theoretical properties such as their robustness and the stability of the trajectories they generate remain open questions. A study by a team from Oak Ridge National Laboratory (ORNL) and Pacific Northwest National Laboratory (PNNL):

- proposes stability analysis methods based on operator norms of DMMs;

- provides sufficient conditions for the stochastic stability of DMMs by leveraging the Banach fixed-point theorem; and

- introduces a set of methods for designing provably stable DMMs.
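To make the operator-norm and Banach fixed-point ideas concrete, here is a minimal Python sketch. It assumes, for illustration only, that the mean of a DMM transition is a two-layer network mu(x) = W2 tanh(W1 x + b1) + b2 with hypothetical weights. Because tanh is 1-Lipschitz, the product of the layers' spectral norms (operator 2-norms) upper-bounds the Lipschitz constant of mu; when that product is below 1, mu is a contraction, and the Banach fixed-point theorem guarantees that iterating it converges to a unique fixed point. This shows the general principle, not the exact conditions derived in the paper.

```python
import math

Matrix = list[list[float]]
Vector = list[float]


def matvec(W: Matrix, x: Vector) -> Vector:
    """Dense matrix-vector product W @ x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def transpose(W: Matrix) -> Matrix:
    return [list(col) for col in zip(*W)]


def norm2(x: Vector) -> float:
    return math.sqrt(sum(xi * xi for xi in x))


def spectral_norm(W: Matrix, iters: int = 200) -> float:
    """Estimate ||W||_2 (largest singular value) by power iteration on W^T W.

    For a unit vector v, ||W v|| <= ||W||_2, so this estimate approaches the
    true value from below; it is an estimate, not a certified bound.
    """
    Wt = transpose(W)
    v = [1.0] * len(W[0])
    for _ in range(iters):
        u = matvec(Wt, matvec(W, v))  # u = W^T W v
        n = norm2(u)
        if n == 0.0:
            return 0.0
        v = [ui / n for ui in u]
    return norm2(matvec(W, v))


def mean_map(x: Vector, W1: Matrix, b1: Vector, W2: Matrix, b2: Vector) -> Vector:
    """Hypothetical DMM transition mean mu(x) = W2 tanh(W1 x + b1) + b2."""
    h = [math.tanh(z + b) for z, b in zip(matvec(W1, x), b1)]
    return [z + b for z, b in zip(matvec(W2, h), b2)]


def lipschitz_bound(weights: list[Matrix]) -> float:
    """Lipschitz bound for an MLP with 1-Lipschitz activations (tanh, ReLU):
    the product of the layers' spectral norms."""
    bound = 1.0
    for W in weights:
        bound *= spectral_norm(W)
    return bound


# Hypothetical weights chosen for illustration.
W1 = [[0.5, -0.2], [0.1, 0.4]]
b1 = [0.1, -0.1]
W2 = [[0.6, 0.3], [-0.2, 0.5]]
b2 = [0.05, 0.0]

L = lipschitz_bound([W1, W2])
print(f"Lipschitz bound: {L:.3f}")  # about 0.37 < 1, so mu is a contraction

# Banach fixed-point theorem: iterating a contraction converges to its unique fixed point.
x = [3.0, -2.0]
for _ in range(100):
    x = mean_map(x, W1, b1, W2, b2)
residual = norm2([a - b for a, b in zip(mean_map(x, W1, b1, W2, b2), x)])
print(f"fixed point: {x}, residual: {residual:.2e}")
```

Since power iteration estimates ||W||_2 from below, a certified check would add a safety margin or use a guaranteed upper bound such as the Frobenius norm.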

The Impact

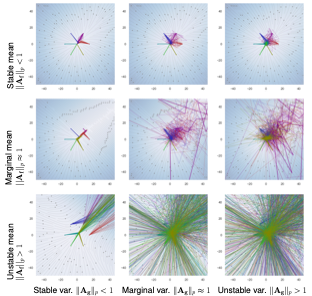

The team conducted numerical studies demonstrating the connection between the parameters of the neural networks and the stochastic stability of DMMs. Ongoing efforts aim to apply this theoretical work on the control and modeling of dynamic systems to real-world scenarios.
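The kind of numerical study described above can be illustrated with a toy experiment. The sketch below assumes a DMM whose transition is Gaussian, x_{t+1} ~ N(mu(x_t), sigma^2 I), with a hypothetical mean network mu(x) = W2 tanh(W1 x) and a constant, state-independent noise scale sigma. Two trajectories started from different states are driven by the same noise draws (synchronous coupling), so the noise cancels in their difference and the gap shrinks at every step by at least the Lipschitz bound. Scaling the first layer's weights by a hypothetical factor alpha shows how a network parameter moves that bound; the Frobenius norm is used as a simple, if loose, upper bound on each layer's spectral norm.

```python
import math
import random

Matrix = list[list[float]]
Vector = list[float]


def matvec(W: Matrix, x: Vector) -> Vector:
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def frobenius(W: Matrix) -> float:
    """||W||_F, a cheap (looser) upper bound on the spectral norm ||W||_2."""
    return math.sqrt(sum(w * w for row in W for w in row))


def mean_map(x: Vector, W1: Matrix, W2: Matrix) -> Vector:
    """Hypothetical transition mean mu(x) = W2 tanh(W1 x) (biases omitted)."""
    return matvec(W2, [math.tanh(z) for z in matvec(W1, x)])


def coupled_distances(W1: Matrix, W2: Matrix, sigma: float, x0: Vector,
                      y0: Vector, steps: int, seed: int = 0) -> list[float]:
    """Simulate two Gaussian trajectories that share the same noise draws
    (synchronous coupling) and return ||x_t - y_t|| at every step."""
    rng = random.Random(seed)
    x, y = list(x0), list(y0)
    dists = [math.dist(x, y)]
    for _ in range(steps):
        eps = [rng.gauss(0.0, sigma) for _ in x]  # shared noise sample
        x = [m + e for m, e in zip(mean_map(x, W1, W2), eps)]
        y = [m + e for m, e in zip(mean_map(y, W1, W2), eps)]
        dists.append(math.dist(x, y))
    return dists


# Hypothetical weights; alpha rescales the first layer.
BASE_W1 = [[0.8, -0.4], [0.3, 0.9]]
W2 = [[0.5, 0.2], [-0.1, 0.6]]


def run(alpha: float, steps: int = 50) -> tuple[float, list[float]]:
    W1 = [[alpha * w for w in row] for row in BASE_W1]
    bound = frobenius(W1) * frobenius(W2)  # Lipschitz bound of mu (tanh is 1-Lipschitz)
    dists = coupled_distances(W1, W2, sigma=0.1, x0=[2.0, -1.0],
                              y0=[-2.0, 1.5], steps=steps)
    return bound, dists


for alpha in (0.5, 1.0):
    bound, dists = run(alpha)
    print(f"alpha={alpha}: bound={bound:.3f}, distance after 50 steps={dists[-1]:.2e}")
```

When the bound exceeds 1 (as it does here for alpha = 1.0), this sufficient condition simply does not apply: the trajectories may still converge, but the argument no longer guarantees it.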

Publication(s) for this work:

- J. Drgona, S. Mukherjee, J. Zhang, F. Liu, and M. Halappanavar, “On the Stochastic Stability of Deep Markov Models.” Conference on Neural Information Processing Systems, December 6-14, 2021

Team Members: Jiaxin Zhang, Frank Liu (ORNL), Jan Drgona, Sayak Mukherjee, Mahantesh Halappanavar (PNNL)

Summary

Deep Markov Models (DMMs) are scalable and expressive generative models that generalize Hidden Markov Models and are used for representation, learning, and inference problems. However, the fundamental stochastic stability guarantees of such models have not been thoroughly investigated. This work provides sufficient conditions for the stochastic stability of DMMs, as defined in the context of dynamic systems, and proposes a stability analysis method based on the contraction of probabilistic maps modeled by deep neural networks. It establishes connections between the spectral properties of the neural network weights, the type of activation function used, and the stability and overall dynamic behavior of DMMs with Gaussian distributions. Additionally, the team proposes a few practical methods for designing constrained DMMs with guaranteed stability.
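One generic way to obtain a DMM with a stability guarantee by construction is to constrain each layer's weights so that the Lipschitz bound of the transition mean stays below 1. The sketch below uses a simple norm projection, in the spirit of spectral normalization, rather than the specific design methods proposed in the paper. Each weight matrix of a hypothetical three-layer transition-mean network is rescaled so its Frobenius norm (an upper bound on its spectral norm) does not exceed a per-layer budget gamma; with 1-Lipschitz activations and gamma**3 < 1, the resulting mean map is a contraction. The layer sizes, gamma, and random weights are illustrative assumptions.

```python
import math
import random

Matrix = list[list[float]]


def frobenius(W: Matrix) -> float:
    """||W||_F, which upper-bounds the spectral norm ||W||_2."""
    return math.sqrt(sum(w * w for row in W for w in row))


def project_layer(W: Matrix, gamma: float) -> Matrix:
    """Rescale W so that ||W||_F <= gamma (hence ||W||_2 <= gamma).
    Layers already inside the budget are returned unchanged (as a copy)."""
    n = frobenius(W)
    if n <= gamma:
        return [row[:] for row in W]
    s = gamma / n
    return [[s * w for w in row] for row in W]


def certified_bound(weights: list[Matrix]) -> float:
    """Lipschitz upper bound for an MLP with 1-Lipschitz activations (tanh, ReLU)."""
    b = 1.0
    for W in weights:
        b *= frobenius(W)
    return b


rng = random.Random(42)
sizes = [2, 16, 16, 2]  # hypothetical layer widths of the transition-mean network
weights = [
    [[rng.gauss(0.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
    for n_in, n_out in zip(sizes[:-1], sizes[1:])
]

gamma = 0.9  # per-layer budget; gamma**3 = 0.729 < 1 for three layers
print(f"before projection: bound = {certified_bound(weights):.2f}")
constrained = [project_layer(W, gamma) for W in weights]
print(f"after projection:  bound = {certified_bound(constrained):.3f}")
```

During training, a projection like `project_layer` would typically be applied after each optimizer step (projected gradient descent). Using the spectral norm directly is less conservative than the Frobenius norm, but it needs care to remain a certified bound.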

Acknowledgement of Support

This material is based upon work supported by the U.S. Department of Energy (DOE), Office of Science, Office of Advanced Scientific Computing Research, Applied Mathematics program under contract and award number KJ0401 at Oak Ridge National Laboratory (ORNL) and under contract and award number 76815 at Pacific Northwest National Laboratory (PNNL). ORNL is operated by UT-Battelle, LLC, for DOE under Contract No. DE-AC05-00OR22725. PNNL is operated by Battelle Memorial Institute for DOE under Contract No. DE-AC05-76RL01830.