ORNL welcomed attendees to the inaugural Southeastern Quantum Conference, held Oct. 28–30 in downtown Knoxville, to discuss innovative ways to use quantum science and technologies to enable scientific discovery.

Seven scientists affiliated with ORNL have been named Battelle Distinguished Inventors in recognition of being granted 14 or more United States patents. Since Battelle began managing ORNL in 2000, 104 ORNL researchers have reached this milestone.

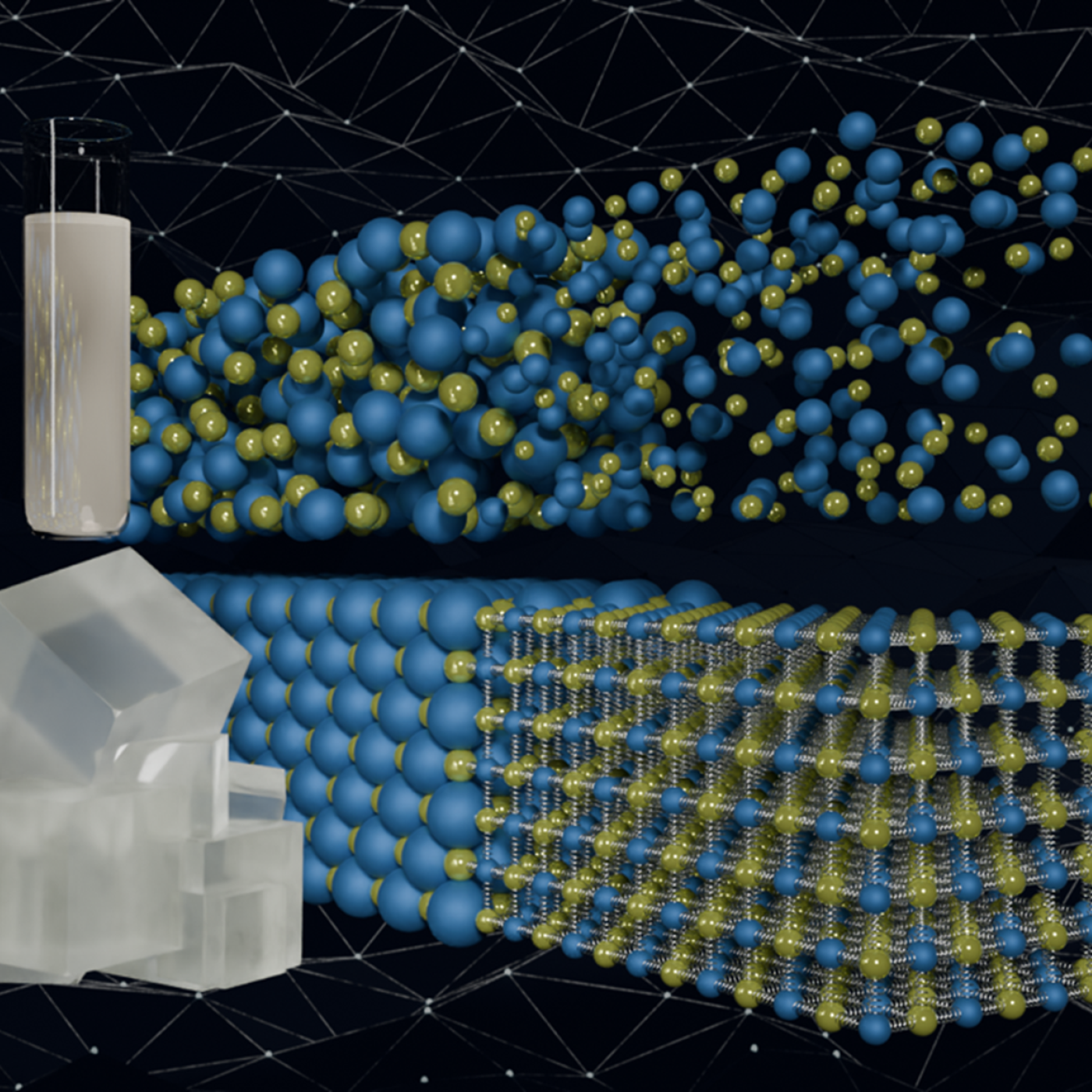

Electrolytes that convert chemical energy to electrical energy underlie the search for new zero-emission power sources. Among these are fuel cells that produce electricity.

In early November, researchers at the Department of Energy’s Argonne National Laboratory used the fastest supercomputer on the planet to run the largest astrophysical simulation of the universe ever conducted. The achievement was made using the Frontier supercomputer at Oak Ridge National Laboratory.

ORNL has been recognized in the 21st edition of the HPCwire Readers’ and Editors’ Choice Awards, presented at the 2024 International Conference for High Performance Computing, Networking, Storage and Analysis in Atlanta, Georgia.

Two-and-a-half years after breaking the exascale barrier, the Frontier supercomputer at the Department of Energy’s Oak Ridge National Laboratory continues to set new standards for its computing speed and performance.

The Department of Energy’s Quantum Computing User Program, or QCUP, is releasing a Request for Information (RFI) to gather input from all relevant parties on three topics: the current and upcoming availability of quantum computing resources; conventions for measuring, tracking, and forecasting quantum computing performance; and methods for engaging with the diverse stakeholders in the quantum computing community. Responses to the RFI will inform QCUP about the immediate and near-term availability of hardware, software tools and user engagement opportunities in quantum computing.

Researchers used the world’s fastest supercomputer, Frontier, to train an AI model that designs proteins, with applications in fields like vaccines, cancer treatments, and environmental bioremediation. The study earned a finalist nomination for the Gordon Bell Prize, recognizing innovation in high-performance computing for science.

Researchers at Oak Ridge National Laboratory used the Frontier supercomputer to train the world’s largest AI model for weather prediction, paving the way for hyperlocal, ultra-accurate forecasts. This achievement earned them a finalist nomination for the prestigious Gordon Bell Prize for Climate Modeling.

A research team led by the University of Maryland has been nominated for the Association for Computing Machinery’s Gordon Bell Prize. The team is being recognized for developing a scalable, distributed training framework called AxoNN, which leverages GPUs to rapidly train large language models.