The Science

A research team from Oak Ridge National Laboratory (ORNL), Pacific Northwest National Laboratory (PNNL), and Arizona State University has developed a novel method to detect out-of-distribution (OOD) samples in continual learning, where a model must learn new tasks without forgetting the knowledge acquired from preceding tasks. The method includes:

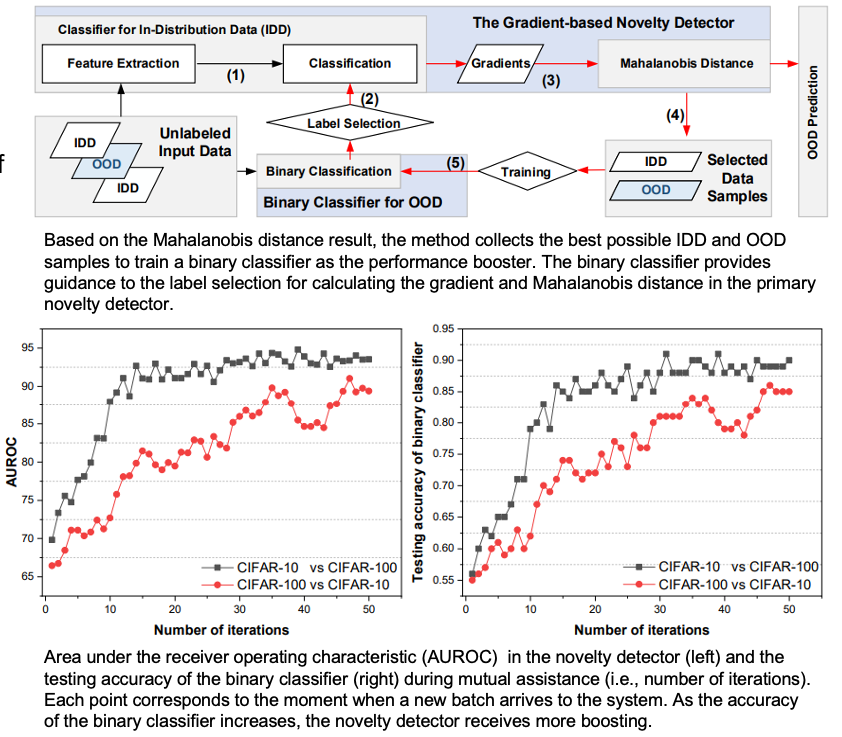

- A more robust approach to computing and evaluating the Mahalanobis distance between the gradients of OOD samples and those of in-distribution data (IDD) samples

- A self-supervised binary classifier that guides the label selection process, improving the effectiveness of the Mahalanobis distance computation
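The gradient-based scoring in the list above can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the team's implementation: it assumes a linear softmax output layer trained with cross-entropy, takes the label as an input (a hypothetical stand-in for the label the self-supervised binary classifier would help select), and fits a single Gaussian to the IDD gradient vectors. The helper names `last_layer_gradient`, `fit_gaussian`, and `mahalanobis_score` are hypothetical, and the demo uses synthetic Gaussian vectors in place of real network gradients.

```python
import numpy as np


def softmax(z):
    # Numerically stable softmax of a 1-D logit vector.
    e = np.exp(z - z.max())
    return e / e.sum()


def last_layer_gradient(W, h, label):
    """Flattened gradient of the cross-entropy loss with respect to the weights
    W (num_classes x num_features) of a linear softmax layer, evaluated at the
    feature vector h for the given label. Assumption: the caller chooses the
    label (hypothetically with help from a separate binary classifier).
        dL/dW = (softmax(W @ h) - onehot(label)) outer h
    """
    delta = softmax(W @ h)
    delta[label] -= 1.0
    return np.outer(delta, h).ravel()


def fit_gaussian(grads, eps=1e-6):
    # Mean and inverse covariance of the IDD gradient vectors (one per row);
    # adding eps * I keeps the covariance matrix invertible.
    mu = grads.mean(axis=0)
    cov = np.cov(grads, rowvar=False) + eps * np.eye(grads.shape[1])
    return mu, np.linalg.inv(cov)


def mahalanobis_score(g, mu, cov_inv):
    # Distance of one gradient vector from the fitted IDD Gaussian;
    # a larger value means the sample looks more like OOD data.
    d = g - mu
    return float(np.sqrt(d @ cov_inv @ d))


# Demo on synthetic vectors standing in for flattened gradients.
rng = np.random.default_rng(0)
dim = 8
idd_train = rng.normal(0.0, 1.0, size=(400, dim))  # used to fit the Gaussian
idd_test = rng.normal(0.0, 1.0, size=(100, dim))   # held-out IDD samples
ood_test = rng.normal(3.0, 1.0, size=(100, dim))   # shifted "OOD" samples

mu, cov_inv = fit_gaussian(idd_train)
idd_scores = [mahalanobis_score(g, mu, cov_inv) for g in idd_test]
ood_scores = [mahalanobis_score(g, mu, cov_inv) for g in ood_test]
print(f"mean IDD score: {np.mean(idd_scores):.2f}")
print(f"mean OOD score: {np.mean(ood_scores):.2f}")

# Shape check: a 3-class layer over 4 features yields a 12-dimensional gradient.
example = last_layer_gradient(rng.normal(size=(3, 4)), rng.normal(size=4), label=0)
print(example.shape)  # (12,)
```

Fitting one Gaussian over all IDD gradients keeps the sketch short; a variant with per-class means and a shared covariance is an equally plausible reading of the description above.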

The Impact

- This method consistently achieves higher accuracy than other unsupervised and supervised methods. On the CIFAR-10 dataset, it achieved 91% accuracy, compared with 84% for the previous state-of-the-art solutions.

- The method is actively being explored as a component to improve the accuracy of one-class continual learning.

Team Members: Frank Liu, Jiaxin Zhang (ORNL), Mahantesh Halappanavar (PNNL), Jingbo Sun, Li Yang, Deliang Fan, Yu Cao (Arizona State University)

Publication(s) for this work:

- J. Sun, L. Yang, J. Zhang, F. Liu, M. Halappanavar, D. Fan, and Y. Cao, “Gradient-based Novelty Detection Boosted by Self-supervised Binary Classification,” AAAI Conference on Artificial Intelligence, February 22-March 1, 2022.

Summary

Continual learning is a branch of machine learning in which new tasks are learned sequentially without forgetting the knowledge learned from preceding tasks. Novelty detection aims to automatically identify OOD samples, which lie outside the distribution of the training data (the in-distribution data, or IDD). The Data-Driven Decision Control for Complex Systems project team developed a self-supervised method that improves the accuracy of OOD sample detection. The key insight is that the gradients of a pretrained deep neural network carry sufficient information to reveal when OOD samples are present. The new approach performs novelty detection by computing the Mahalanobis distance between network gradients, assisted by a self-supervised binary classifier. Extensive evaluation on the CIFAR-10, CIFAR-100, SVHN, and ImageNet datasets demonstrates that the proposed approach consistently outperforms state-of-the-art supervised and unsupervised methods in terms of AUROC (area under the receiver operating characteristic curve).
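Results above are reported as AUROC. As a hedged illustration (not the team's evaluation code), the sketch below computes AUROC from detector scores using the standard rank-based definition: the probability that a randomly chosen OOD sample scores higher than a randomly chosen IDD sample, with ties counted as one half. The function name `auroc` and the convention that a higher score means "more likely OOD" are assumptions; `sklearn.metrics.roc_auc_score` with OOD samples labeled 1 should give the same value.

```python
import numpy as np


def auroc(idd_scores, ood_scores):
    """Rank-based AUROC for a detector where a higher score means "more likely OOD".

    Equals the fraction of (IDD, OOD) pairs in which the OOD sample scores
    higher; tied pairs count as one half.
    """
    idd = np.asarray(idd_scores, dtype=float)[:, None]  # shape (n, 1)
    ood = np.asarray(ood_scores, dtype=float)[None, :]  # shape (1, m)
    # Broadcasting compares every OOD score with every IDD score (n * m pairs).
    wins = (ood > idd).sum() + 0.5 * (ood == idd).sum()
    return float(wins / (idd.size * ood.size))


# 4 of the 6 (IDD, OOD) pairs are ranked correctly.
print(auroc([0.1, 0.4, 0.35], [0.8, 0.3]))  # 0.6666666666666666
print(auroc([1.0, 2.0], [3.0, 4.0]))        # 1.0 (perfect separation)
print(auroc([1.0], [1.0]))                  # 0.5 (a tie)
```

The pairwise comparison uses memory proportional to n times m; for large evaluation sets, a sort-based rank computation yields the same value more cheaply.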

Acknowledgement of Support

This material is based upon work supported by the U.S. Department of Energy, Office of Science, Office of Advanced Scientific Computing Research, Applied Mathematics program under contract and award number KJ0401 at Oak Ridge National Laboratory (ORNL) and under contract and award number 76815 at Pacific Northwest National Laboratory (PNNL). ORNL is operated by UT-Battelle, LLC, for DOE under Contract DE-AC05-00OR22725. PNNL is operated by Battelle Memorial Institute for DOE under Contract No. DE-AC05-76RL01830. The work is also partially supported by DOE under contract DE-SC0021322 with the Arizona Board of Regents for Arizona State University.