We present training approaches that proactively adapt with resource elasticity for faster convergence and more efficient use of resources. Our analysis and experiments show that, of the four schedules, Schedule II achieves the best convergence. It is about 1.6 times faster than Schedule I, and it is also faster than Schedule IV while using fewer resources. These results demonstrate the advantage of proactively adapting with resource elasticity in distributed training.

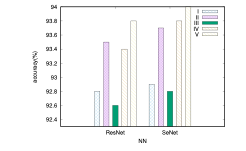

Our analysis of KAVG reveals that scaling P poses a challenge for convergence. We show that reduction momentum, built up over the series of global updates to the model, accelerates convergence and improves scaling. Our experiments with ResNet-18 and SENet on CIFAR-10 show that for MAVG with μ > 0, Schedule V achieves the best convergence among the five schedules.
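To make the idea of momentum on global updates concrete, the following is a minimal sketch on a toy quadratic objective. It is not the paper's implementation. It assumes that P denotes the number of parallel learners and K the number of local SGD steps between averaging rounds (neither is defined in this summary), and it uses a generic heavy-ball form for the momentum on the averaged update, which may differ from the exact MAVG rule. The names `mavg_sketch` and `quadratic_grad` are hypothetical. Setting `mu=0` reduces the loop to plain K-step averaging.

```python
import numpy as np


def quadratic_grad(target, noise_std=0.0):
    """Return a (possibly noisy) gradient function for 0.5 * ||w - target||^2.

    Toy objective chosen only for illustration; it is not from the paper.
    """
    def grad(w, rng):
        noise = noise_std * rng.standard_normal(w.shape)
        return (w - target) + noise
    return grad


def mavg_sketch(grad_fn, w0, P=4, K=8, rounds=50, lr=0.1, mu=0.5, seed=0):
    """K-step averaging with momentum on the global update (illustrative).

    Assumptions: P learners, K local SGD steps per round, momentum
    coefficient mu applied to the averaged (reduced) update.
    """
    rng = np.random.default_rng(seed)
    w = np.asarray(w0, dtype=float).copy()
    v = np.zeros_like(w)  # momentum buffer over global updates

    for _ in range(rounds):
        local_models = []
        for _ in range(P):
            # Each learner starts from the current global model.
            w_local = w.copy()
            for _ in range(K):
                w_local -= lr * grad_fn(w_local, rng)
            local_models.append(w_local)

        # Reduction step: average the P local models.
        w_avg = np.mean(local_models, axis=0)

        # Global update is the displacement produced by this round.
        d = w_avg - w

        # Momentum accumulated over the series of global updates.
        v = mu * v + d
        w = w + v

    return w


if __name__ == "__main__":
    target = np.array([1.0, -2.0])
    grad_fn = quadratic_grad(target, noise_std=0.1)
    for mu in (0.0, 0.5):
        w = mavg_sketch(grad_fn, np.zeros(2), mu=mu)
        print(f"mu={mu}: distance to optimum = {np.linalg.norm(w - target):.4f}")
```

This toy problem only shows the mechanics of the update. It says nothing about which setting converges faster on real models, and it does not model the schedules compared in the experiments.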

Citation and DOI:

Elastic distributed training with fast convergence and efficient resource utilization, 20th IEEE International Conference on Machine Learning and Applications (ICMLA 21)