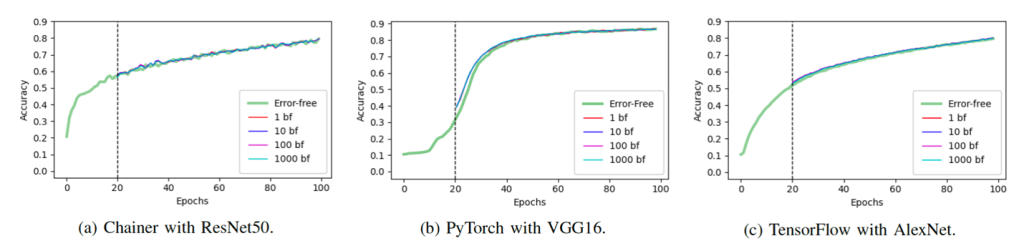

A team of researchers from Oak Ridge National Laboratory (ORNL), the Costa Rica Institute of Technology, Clemson University and the Barcelona Supercomputing Center designed, implemented and evaluated a fault injector to study the effects of silent data corruption (SDC) on deep learning in high performance computing (HPC) environments. As deep learning (DL) models continue to permeate many scientific disciplines, it becomes crucial to understand how they integrate with the rest of the HPC ecosystem. In particular, SDC in the hardware components of supercomputers has been a major concern for scientific codes. This paper leverages checkpoint alteration as a mechanism to study the effects of SDC on DL models and frameworks. We create a parameterized HDF5-based checkpoint file fault injector that alters the values of a DL model by flipping bits according to several control knobs. As long as an HDF5 checkpoint file is produced, the injector works with any model and any framework without requiring any modification. This machinery was tested with three popular DL models and three main DL frameworks on the Summit supercomputer. We find that the models are extremely resilient as long as a crucial bit in the IEEE-754 representation of their values is not inverted. The results also highlight which scenarios cause the most damage to model training and execution.
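To make the bit-flip mechanism concrete, here is a minimal sketch of inverting a single bit in the IEEE-754 single-precision encoding of one value. It is not the authors' injector: the function name `flip_bit`, the choice of 32-bit floats, and the sample weight `0.5` are assumptions made for illustration, and the text does not say which bit is the crucial one. A checkpoint-level injector would presumably apply an operation like this to values stored in the HDF5 file.

```python
import struct


def flip_bit(value: float, bit: int) -> float:
    """Return `value` as a float32 with one bit inverted.

    Bit 0 is the least significant mantissa bit, bits 23-30 hold the
    exponent, and bit 31 is the sign (IEEE-754 single precision).
    `flip_bit` is a hypothetical helper, not part of any library.
    """
    if not 0 <= bit <= 31:
        raise ValueError("bit must be in the range 0..31")
    # Reinterpret the float32 bytes as an unsigned 32-bit integer.
    (raw,) = struct.unpack("<I", struct.pack("<f", value))
    # Invert the chosen bit, then reinterpret the result as a float32.
    (flipped,) = struct.unpack("<f", struct.pack("<I", raw ^ (1 << bit)))
    return flipped


if __name__ == "__main__":
    weight = 0.5  # assumed example value standing in for a model weight
    for bit in (0, 22, 23, 30, 31):
        print(f"bit {bit:2d}: {weight} -> {flip_bit(weight, bit)!r}")
```

In this sketch, flipping a low mantissa bit barely changes the value, while flipping the most significant exponent bit (bit 30) turns 0.5 into 2^127. This shows how the impact of a single flip can depend strongly on its position.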